How has the explosion of AI impacted Google’s emphasis on E-E-A-T?

Last December, Google introduced a noteworthy update to its E-A-T (Expertise, Authoritativeness, Trustworthiness) guidelines, adding an extra ‘E’ for “experience”.

Google has always been (rightly) very strict with its quality rating of healthcare information, treating content that could impact someone’s health or financial wellbeing (known as “Your Money or Your Life”, or YMYL, content) very differently to content from other industries.

At Medico, our interpretation of Google’s rules has always been that healthcare content should not be written by faceless organisations (and now, faceless generative AI!), but by people who are qualified to write about the topic. This is why we have spent the majority of the last decade assembling a best-in-class team of medical copywriters from a wide range of medical disciplines, and have evolved our content production processes to meet the most stringent MLR review standards. To us, the additional “E” was simply Google reaffirming this truth: you must have experience in what you’re writing about.

This raises the question: can AI-driven content ever meet the quality guidelines put in place by Google?

In short, not yet.

Are healthcare companies using AI to write content?

Right now, companies are using AI to generate an enormous volume of content to game the system and gain ground in organic rankings. For some, it’s working.

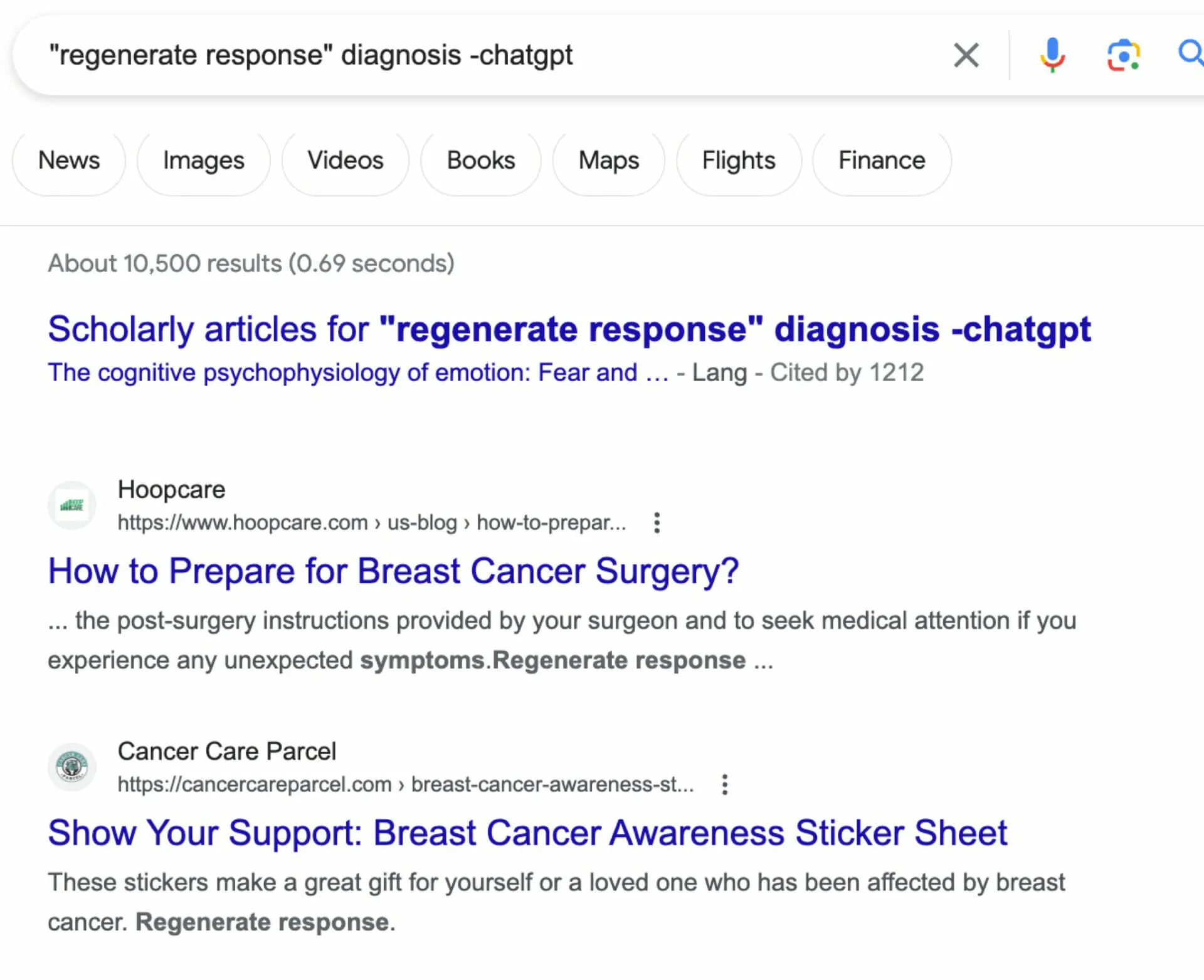

A quick search for “regenerate response” (the ever-present button at the bottom of any ChatGPT output) shows that, within the healthcare space, thousands of content producers are wearing out the “c” and “v” keys on their keyboards, giving no time to editing, updating or even proofreading the content that’s being churned out.

In a low-risk industry like fashion, more power to those people, but when it comes to healthcare this is setting a dangerous precedent.

Why is it dangerous to use AI for healthcare content?

Medical content needs to satisfy many objectives. When written for patients, it needs to communicate information in an accessible way without patronising the reader. When written for HCPs, it needs to be stimulating while covering potentially technical subjects, treading a fine line between delivering something new and valuable and taking existing knowledge for granted.

But no matter the use case, it’s imperative that it’s accurate!

This is where it’s easy to see how ChatGPT’s current limitations could be extremely damaging.

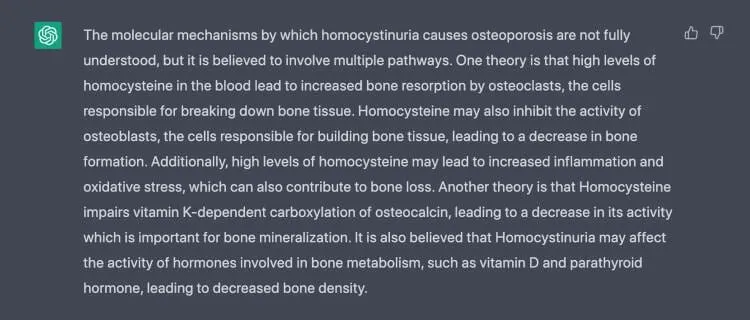

To illustrate this, take a look at the study “Artificial Hallucinations in ChatGPT: Implications in Scientific Writing”. The writers asked ChatGPT for information about a specific type of osteoporosis. To a layman (me), the answer looks impressive and accurate. But under the scrutiny of a subject matter expert, the information was completely wrong.

We asked ChatGPT to explain these findings further and provide references to fact-check the presumed “homocysteine-vitamin K-osteocalcin” axis in osteoporosis (Figure 2). Hence, it provided five reference dating to the early 2000s. None of the provided paper titles existed, and all provided PubMed IDs (PMIDs) were of different unrelated papers.

Artificial Hallucinations in ChatGPT: Implications in Scientific Writing - Hussam Alkaissi and Samy I McFarlane

So how can content producers in the healthcare industry take advantage of this game-changing technology?

The answer is: with care and caution.

How should the medical communications industry be using AI?

At Medico, we’re using AI; we would be crazy not to.

Every week we’re experimenting with new use cases, but nothing moves into our client workflows until it has been rigorously tested and unanimously signed off. We want to make things faster and pass on cost savings to clients, but not to the detriment of quality.

When it comes to content writing, fact-checking and proofreading, we’re not ready to trust the AI. We believe there are serious ethical consequences to leaving potential mistakes in medical content that could impact someone’s health, and we also have a duty to our customers.

Although Google doesn’t seem to penalise people for using AI-generated content right now, in 2011 Google wasn’t penalising websites that were using black hat link-building techniques to get ahead, either.

Example of ranking drop off due to the Penguin algorithm update from a Sistrix example website.

When the Penguin algorithm update was released in 2012, websites with strong organic traffic fell out of the top 10 pages on Google overnight. The changes impacted over 100 million search queries. Huge amounts of investment in SEO were lost, and companies had to spend big to try to regain their losses.

It’s too early to say whether a Penguin-style update will come for AI-generated content any time soon, but at Medico we feel that the risks and downsides of leaning heavily on AI for content production are far too high at this stage, and we urge anyone using AI-generated content in the healthcare sphere to reconsider.

In content production, you are what you E-E-A-T. We want content to come from medical writers with experience, experts in their field with the ability to channel their authority into their writing and promote trust.

As soon as OpenAI works out how to do that, we’ll gladly make use of it.